Why Open-Source is the Lifeline for Low-Resourced Languages in the AI Era

In the rapidly evolving landscape of artificial intelligence and language technology, a digital divide continues to widen. While major languages flourish with abundant resources and technological support, thousands of languages spoken by millions remain in the shadows of the digital revolution. This disparity isn’t just a technological issue—it’s a matter of cultural preservation, accessibility, and equity in the digital age.

The New Reality of Language Technology

The emergence of Large Language Models (LLMs) has fundamentally transformed how language technologies are developed and deployed. These powerful AI systems, trained on vast amounts of text data from across the internet, have become the new foundation for nearly all language-based applications—from translation to content creation.

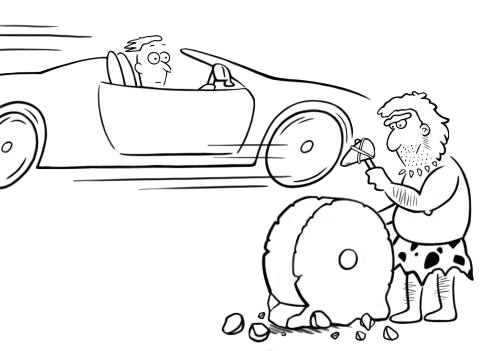

This shift creates a paradox for low-resourced languages. Traditional approaches often involved specialized companies developing dedicated solutions for specific languages, with proprietary datasets as their competitive advantage. But this model is rapidly becoming obsolete.

The Economic Sea Change

Consider the case of a startup developing translation services for a low-resourced language. Previously, such a company might have collected proprietary data, built specialized models, and offered their services at a premium. This approach made economic sense in a world of specialized language tools.

Today, the landscape looks dramatically different. The organizations building large language models continually crawl the web, ingesting any available content regardless of language. These organizations have resources that individual startups or language communities simply cannot match. The result? Any language with sufficient open data available online has a better chance of being represented in these powerful systems than languages with small, fragmented, proprietary datasets.

Why Hoarding Data No Longer Makes Sense

Let’s be clear: if your primary goal is to advance your language’s digital presence, keeping your language data locked away is now counterproductive. Here’s why:

- Scale matters more than ever. LLMs thrive on massive amounts of data. A small proprietary dataset cannot compete with the collective power of open resources.

- Network effects benefit open resources. When language data is openly available, it attracts more researchers, developers, and speakers to contribute, creating a virtuous cycle of improvement.

- Fragmentation hurts everyone. Multiple small, isolated datasets create redundant efforts and prevent the critical mass needed for meaningful technological progress.

The Open-Source Imperative

For low-resourced language communities, the path forward is clear: embracing open-source principles isn’t just beneficial—it’s essential for survival in the digital age. By making speech, text, and other linguistic resources openly available under permissive licenses, these communities can:

- Ensure their languages are represented in the next generation of AI systems

- Leverage the research and development efforts of the global AI community

- Create a foundation for sustainable digital presence without reinventing the wheel

- Foster collaboration rather than competition among those working on the same language

Success Stories Worth Noting

We’re already seeing the impact of this approach. The Masakhane initiative in Africa has brought together researchers across the continent to develop NLP tools for African languages through open collaboration. Mozilla’s Common Voice project has collected thousands of hours of volunteer-contributed, openly licensed voice data across numerous languages, making speech technology more accessible for previously underserved communities.

These projects suggest that collective, open efforts can achieve far greater impact than isolated commercial ventures in today’s AI landscape.

The Way Forward

If you’re working with a low-resourced language, consider this a call to action:

- Release your existing language data under permissive licenses, such as CC-BY for datasets or MIT for code and tools

- Join forces with others working on your language rather than competing

- Contribute to multilingual open-source initiatives that include your language

- Advocate for funding models that support open language resources rather than proprietary ones
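For the first step, a release is easier to reuse when the license travels with the data in machine-readable form. The following is a minimal sketch, not a standard: the `manifest.json` file name, the field names, and the `build_manifest` and `write_manifest` helpers are all hypothetical choices made for illustration. It assumes the license is given as an SPDX identifier (`CC-BY-4.0`) and the language as an ISO 639-3 code.

```python
import hashlib
import json
from pathlib import Path


def build_manifest(data_dir: Path, language_code: str,
                   license_id: str = "CC-BY-4.0") -> dict:
    """Describe every file under data_dir so others can verify and reuse it.

    The manifest layout here is an illustrative assumption, not an
    established format.
    """
    files = []
    for path in sorted(data_dir.rglob("*")):
        # Skip directories and any manifest left over from a previous run.
        if path.is_file() and path.name != "manifest.json":
            files.append({
                "path": path.relative_to(data_dir).as_posix(),
                "bytes": path.stat().st_size,
                "sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
            })
    return {"language": language_code, "license": license_id, "files": files}


def write_manifest(data_dir: Path, language_code: str,
                   license_id: str = "CC-BY-4.0") -> Path:
    """Write manifest.json next to the data and return its path."""
    manifest = build_manifest(data_dir, language_code, license_id)
    out = data_dir / "manifest.json"
    out.write_text(json.dumps(manifest, ensure_ascii=False, indent=2),
                   encoding="utf-8")
    return out
```

Calling `write_manifest(Path("my_corpus"), "yor")` on a hypothetical `my_corpus` directory would record each file's size and checksum alongside the license, so anyone who finds the data knows what they may do with it and can confirm that their copy is intact.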

The future of low-resourced languages in the digital world depends not on who can monetize language data most effectively, but on who can collaborate most openly and efficiently. In an era where the giants of AI are constantly harvesting the web for training data, visibility and openness are the true currency for linguistic inclusion.

Your language’s digital future may well depend on this fundamental shift in thinking: from data as a private asset to data as a public good that elevates the entire language community.